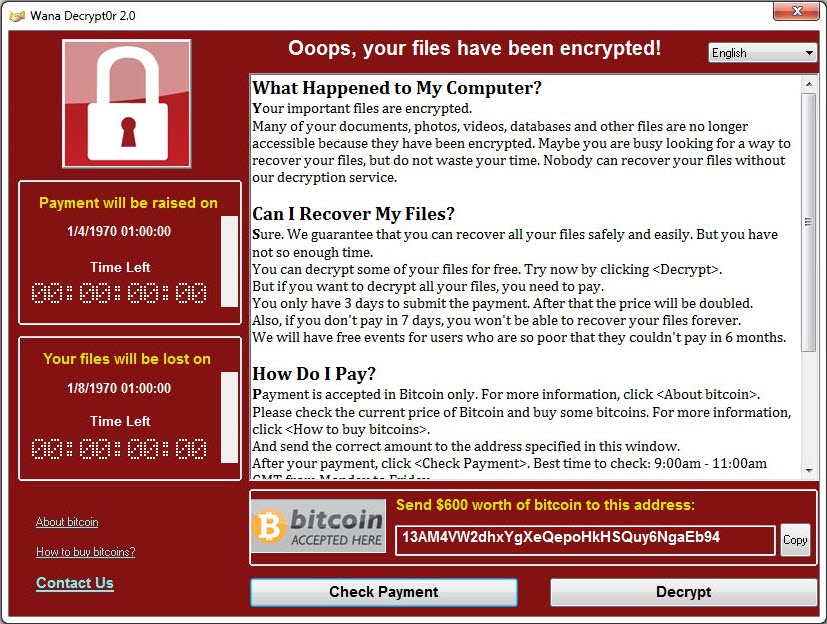

Per definition, thinking about incident response involves having to think about what to do if bad things happen. This is uncomfortable in and of itself. It also involves stepping out of one’s own comfort zone. Especially in smaller companies the notion of “We’ll be alright” is often prevalent, as is the opinion that "we are not an interesting target". But then, the saying goes that there are two types of companies: those who know that they've been breached and those who don't. While it is impossible to gain ground truth in this regard, we can completely get behind the notion that there are companies that are prepared for a breach and those that are not. Some occurrences that garnered a lot of attention in the media, such as the WannaCry infection wave, were undoubtedly a rude wakeup call for some organizations.

In this first out of two episodes we will address some of the strategic considerations about incident response and readiness.

In practice, being an incident responder is very much like being a firefighter who is called out to a fire. They sometimes get dispatched with a minimal set of information (“There’s a fire at address X.”) and are expected to show up in record time, inflicting as little additional damage to property as possible and no casualties either among their own men or among the occupants of the blazing building – and there is still a fire that needs putting out. To achieve this goal, information is by far the most important thing to have as a firefighter. Every child learns that when calling the fire brigade, they must try and answer the following questions to an emergency service dispatcher to the best of their abilities:

- “What has happened?”

- “Where has it happened?” (at this point the fire crews are already getting into their vehicles)

- “What is burning?” (i.e. wood, chemicals, plastics, metals etc)

- “How many are injured?”

- “Are there people still in the building?”

- “Who is reporting the event?” (This is actually the least critical information for first responders)

Identifying risks & scenarios

One crucial question that needs answering is this: What does the organization perceive to be a risk, and therefore what incidents is the organization trying to prepare for? Also, what scenarios would put a hypothetical attacker (internal or external) in a position that allows him to inflict damage to the organization?

Trying to tackle all possible risks at once will make the entire project seem so daunting that it is either only done incompletely or even abandoned altogether because of the costs potentially spiraling out of control. To pick a somewhat extreme example, if a company wanted to make sure that its datacenter is safe from all man-made and natural disasters and at the same securing it against insider threats, we are looking at several projects that are challenging on their own, but in combination they add a degree of complexity that severely stretches the organization's capabilities. The emphasis should be more on those risks which are an immediate threat to an organization's data and its ability to provide products or services. Granted, an earthquake or meteorite might also have this effect. However, depending on the area you are located in, historical data might demonstrate that such a scenario is only a remote possibility at the present time, compared to the likelihood of suffering a data breach or other type of compromise. Other figures must also be taken into consideration - after all, attackers have different objectives in mind when targeting an organization. For the great majority of attackers, we are well past the point where systems are compromised out of sheer curiosity. Thus, the objective of an attack (most of the time) is to gain a strategic, tactical or financial benefit. The question to ask,is 'cui bono?' - who will profit, if your organization is hit in a specific way?

A "fire code" for networks

When constructing a new building, in many areas the local fire marshal needs to examine the construction before allowing occupants in. Come to think of it, that fire marshal is essentially someone with experience as an active firefighter. Still, he is called in even though there is no fire (and because the owner of the building is legally obliged to do so). Yet, organizations are often reluctant to get an incident responder's expertise on board when building or restructuring a network. Just as the fire brigade needs a floor plan to get an idea of what they are looking at, incident responders need to know how a network is laid out. Without this information it is very difficult for a responder to figure out where and how to start as well as how to contain an environment so that a disaster does not spread to unaffected areas of the network.

Therefore, networks need thorough documentation about their layout, about interconnections, dependencies, critical components and services. In the case of an emergency, specific areas must be sealable so as to maintain the availability of the most critical services. In buildings, fire doors and specially constructed wall elements can do this; VLANs and firewalls would be one way of achieving the same effect in a network. Just as proper building design can help to reduce damage in case of a fire, considering incident response as a relevant use case and striving for recoverability from the beginning can massively reduce downtime and costs resulting from an IT security incident.

Cost calculation & benefits

It is a given that external resources do cost money. It is therefore understandable that getting expensive specialists on board is something that management might be reluctant to greenlight. On the other hand, investing money at this point as opposed to when an incident has occurred would be a wise decision. The reason is very simple and boils down to financial as well as practical considerations. The best person to ask what type of information an incident responder needs, should the worst happen, is an incident responder. Also, they might be able to detect and give advice to mitigate potential issues in the network setup which so far have never even been identified as such. During this process, all people involved need to come together and make decisions.

Though hiring an external expert may look like a substantial investment with an unclear return, it will save the organization lots of money in the long run. It is a lot cheaper to hire a consultant ahead of time to consolidate information and form a plan than to find oneself in the middle of an incident and only then to start making up a plan as you go along. Depending on your location, there are figures available that reflect the average financial damage an organization suffers in the wake of an IT incident. In Germany, for instance, the average damage per case in the SME sector amounts to 41.000 Euros (~44.000 USD). With this in mind, the chance to get funding approved for an incident readiness project can be increased significantly.

In general, decision makers really need to let go of the concept of an ROI (Return on Investment) when it comes to investing in security. In many heads, IT in general and IT security in particular are still primarily a cost center and do not contribute to the profitability of an organization. Many companies need to fulfill certain legal and compliance standards which also have a security component. Compliance guidelines can provide a security base line, but they are rarely exhaustive. Organizations are tempted to just do the minimum they need to meet regulatory standards, but not more. This may cover all legal bases, but still leave an attack surface that can become a company's undoing. This conundrum might also be at the root of the recent data breaches that gained public attention. Standards were met, but breaches still occurred. Meeting a slew of different standards and suffering a breach apparently are not mutually exclusive. The question should not be "How much profit do we get out of that investment?", but rather "How much profit do we lose in case of an incident if we do not make that investment?" A word of warning, though: be prepared to fight an uphill battle when it comes to a Return of Security Investment (ROSI). It is notoriously hard to argue for it, most of the time. However, an increasing number of organizations has been learning the hard way that without adequate information security, they may be absolutely unable to conduct business. When it comes to a lack of information security, effects may or may not be catastrophic and ex-ante estimates are hard.

Time for a shakedown

Getting ready to deal with security incidents is a good opportunity to take a thorough look at the network infrastructure and some of the processes associated with it. Especially in networks which have been growing over a number of years, weak point may have managed to creep in. Things which might have been intended as a temporary fix for an issue that occurred at some point might now be used as a permanent fixture. Your worst case scenario here does not even need an external factor to trigger an incident. A quick "hack" can easily develop into full-blown disaster, sometimes years down the line: Imagine such a fix was implemented by someone who has left the company long ago. You could find yourself in a situation where a critical service just crashes randomly, without anyone knowing why. And later it turns out that a former administrator has created a cronjob or a script which just restarted the service automatically if it was down. So the underlying issue was never sorted out, but to keep operations going, a quick fix was built in, which of course makes sense. And then the machine on which that script ran breaks down. Use the opportunity to get rid of some of those old "quick and dirty" fixes. Documentation is key - make sure that if you cannot get rid of old hacks easily, they are at least documented. If you have any test environments or databases that are facing outward, take a good look at them and check if they are properly secured. It would not be the first time that unauthorized individuals access data in unsecured, outward-facing databases. An oversight here can really be the last straw for a company. Should the shakedown show that critical services or confidential information are running on hardware which is either non-redundant, web-facing or otherwise in an unnecessarily exposed position, consider relocating and consolidating those components.

Obtaining outside resources

Another question which needs answering deals with outsourcing parts of the incident response. Letting a team of external specialists deal with this aspect makes a lot of sense, especially in smaller businesses. In such cases it might not be efficient to hire additional staff, let alone hiring a dedicated incident responder. Come to think of it, even though a local car dealership needs to think about what to do if bad things happen to their IT, they would likely not think of hiring a malware analyst or trained incident responder to deal with this eventuality. Larger businesses on the other hand might need their own dedicated IR team due to the complexity of the environment and the requirement for really short response times, but still with an option to pull in additional external resources.

Getting external resources on board is a topic that in some organizations needs to be handled very delicately. Handing certain responsibilities to an outside party might lead the internal IT staff to believe that they are about to be outsourced altogether. Therefore, a certain degree of resistance to the plan or even open rebellion might be developing. However, this project is not about any specific person or their individual performance in the past – it’s all about keeping the business alive if it comes to the worst. Incident response is, after all, not an everyday requirement. The best incident response plan is still one that never needs to be put into action. Yet, if people fear reprimands for shortcomings that may or may not have been there, they might be tempted to provide a filtered version of reality which will inevitably come back to haunt you.

The first step in the entire process of establishing a sustainable degree of incident readiness will not cost you an arm: talk to people and get in touch with experts who can provide you with more insights than you may currently have.

Pain points & fallbacks

During this initial process, there is a number of factors which need defining: among those is the Maximum Tolerable During this initial process, there is a number of factors which need defining: among those is the Maximum Tolerable Downtime (MTD) of a certain service; this concept, originating from risk management, represents figures which will have a direct influence on an incident response strategy. In a medical facility, the maximum tolerable downtime for the lab is very near zero, because departments such as the emergency room usually needs certain lab results within minutes. Therefore, they will have priority during an incident response. Labs which serve oncology departments on the other hand might tolerate a couple of days before downtime becomes a critical issue. Perioperative imaging might have an MTD of up to a couple of hours or even days. MTD can also be defined by other factors, e.g. for how long can a service of any kind go down before it starts impacting sales figures? This again depends on the business; a defective web shop system might not do a lot of harm when it goes down for a few hours on a Tuesday night in July, but come Christmas season this might look entirely different. With this in mind and all the figures established, a strategy can be formulated. An organization must also get an overview of key assets and the infrastructure of the organization. This initial planning phase likely consists of many question marks. Some of the questions might seem uncomfortable because it can yield the impression that IT has been negligent, even though in reality this might be far from the truth. However, without knowing which services and which machines are considered critical and therefore which parts of the network require special attention, any plan that is formed without this information is almost guaranteed to fall apart.

A ransomware infection often results in a degree of chaos and confusion during daily operations. In many modern environments hardly any fallback procedures exist which do not rely on computer-based technology. Some parts of an organization have an option to go back to using pen and paper to keep their most essential services running, albeit at a reduced pace. This has happened when a 911 dispatch center in Ohio had their systems shut down by ransomware - this threw their operations about 25 years back in time almost over night. There are cases where pen & paper are a viable solution, in other cases it is not an option (e.g. warehousing with chaotic storage). Developing fallback strategies is something that definitely should be considered when developing an incident response plan.

In a nutshell: how to get started

- Identify the type of incident the organization wants to prepare for

- Make sure that senior management supports the plan. Support usually tends to be higher if there has been an incident in the recent past.

- Evaluate whether there are existing security concepts and check if they can be adapted

- If existing systems or processes can continue being used in your current plan, this might save costs. Note, though, that "make-do and mend" just for the sake saving on budget might not be a sustainable approach in the long run.

- Be critical of the data you receive and, if required, make it clear that developing this plan is not a performance review

- Request quotes from companies who specialize in Incident Response and Handling, such as G DATA Advanced Analytics. Establish a relationship with that partner - it will save so much time in the long run.